The Large Hadron Collider is the world’s largest proton collider, and in a mere five years of active data acquisition, it has already achieved fame for the discovery of the elusive Higgs boson in 2012. Though the LHC is currently off to allow for a series of repairs and upgrades, it is scheduled to begin running again within the month, this time with a proton collision energy of 13 TeV. This is nearly double the previous run energy of 8 TeV, opening the door to a host of new particle production processes. Many physicists are keeping their fingers crossed that another big discovery is right around the corner. Here are a few specific things that will be important in Run II.

1. Luminosity scaling

Though this is a very general category, it is a huge component of the Run II excitement. This is simply due to the scaling of luminosity with collision energy, which gives a remarkable increase in discovery potential for the energy increase.

If you’re not familiar, luminosity is the number of events per unit time and cross-sectional area. Integrated luminosity sums this instantaneous value over time, giving a quantity with units of 1/area.
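As a rough illustration of that time sum, here is a minimal Python sketch. The function name `integrated_luminosity` and the sample values are made up for illustration; they are not real LHC running conditions.

```python
# Minimal sketch: integrated luminosity as a time sum of instantaneous luminosity.
# All numbers below are illustrative assumptions, not real LHC running conditions.

def integrated_luminosity(samples):
    """Sum L * dt over (instantaneous luminosity in cm^-2 s^-1, duration in s) pairs.

    Returns the integrated luminosity in cm^-2 (units of 1/area).
    """
    return sum(lumi * dt for lumi, dt in samples)

# Hypothetical running periods: (instantaneous luminosity, duration)
samples = [
    (5.0e33, 1.0e6),
    (1.0e34, 2.0e6),
]

total = integrated_luminosity(samples)
print(f"Integrated luminosity: {total:.2e} cm^-2")
```

The time unit cancels in each product, which is why the result carries units of inverse area only.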

In the particle physics world, integrated luminosities are measured in inverse femtobarns, where 1 fb⁻¹ = 1/(10⁻⁴³ m²). Each of the two main detectors at CERN, ATLAS and CMS, collected 30 fb⁻¹ by the end of 2012. The main point is that more luminosity means more events in which to search for new physics.
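To make "more luminosity means more events" concrete, the expected number of events for a process is its cross section times the integrated luminosity. The sketch below assumes a hypothetical process with a 1 pb cross section and ignores detector acceptance and efficiency; the names `expected_events`, `FB_IN_M2` and `PB_IN_FB` are chosen here for illustration.

```python
# Minimal sketch: unit conversion and expected event counts.
# The 1 pb cross section is a hypothetical value chosen for illustration.

FB_IN_M2 = 1e-43   # 1 femtobarn expressed in square metres
PB_IN_FB = 1e3     # 1 picobarn = 1000 femtobarns

def expected_events(cross_section_fb, integrated_lumi_inv_fb):
    """N = cross section * integrated luminosity (acceptance and efficiency ignored)."""
    return cross_section_fb * integrated_lumi_inv_fb

sigma_fb = 1.0 * PB_IN_FB    # hypothetical 1 pb process, in fb
lumi_inv_fb = 30.0           # dataset size quoted in the text, in fb^-1

n_events = expected_events(sigma_fb, lumi_inv_fb)
lumi_inv_m2 = lumi_inv_fb / FB_IN_M2   # the same dataset expressed in m^-2

print(f"Expected events: {n_events:.0f}")
print(f"Integrated luminosity: {lumi_inv_m2:.1e} m^-2")
```

Working in femtobarns throughout keeps the arithmetic simple, since the units of area cancel directly.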

Figure 1 shows the ratios of LHC luminosities for 7 vs. 8 TeV, and again for 13 vs. 8 TeV. Since the plot is in log scale on the y axis, it’s easy to tell that 13 to 8 TeV is a very large ratio. In fact, 100 fb⁻¹ at 8 TeV is the equivalent of 1 fb⁻¹ at 13 TeV. So increasing the energy by a factor of less than 2 effectively increases the integrated luminosity by a factor of 100! This means that even in the first few months of running at 13 TeV, there will be a huge amount of data available for analysis, leading to the likely release of many analyses shortly after the beginning of data acquisition.
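The equivalence is simple arithmetic once a luminosity ratio has been read off the plot. The sketch below takes the factor of 100 quoted above as an input; in practice the ratio depends on the mass of the particles being produced, so it is a parameter rather than a constant. The function name `equivalent_lumi_at_8tev` is hypothetical.

```python
# Minimal sketch: how much 8 TeV data a 13 TeV dataset is "worth" for one process.
# The ratio is an input read off a plot like Figure 1; it varies with the
# mass scale of the process, so 100 is only the example quoted in the text.

def equivalent_lumi_at_8tev(lumi_13tev_inv_fb, luminosity_ratio):
    """Return the 8 TeV integrated luminosity (fb^-1) giving the same event yield."""
    return lumi_13tev_inv_fb * luminosity_ratio

equivalent = equivalent_lumi_at_8tev(1.0, 100.0)
print(f"1 fb^-1 at 13 TeV ~ {equivalent:.0f} fb^-1 at 8 TeV for this process")
```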

2. Supersymmetry

Supersymmetry proposes the existence of a superpartner for every particle in the Standard Model, effectively doubling the number of fundamental particles in the universe. This helps to answer many questions in particle physics, notably the question of why the Higgs boson mass is so much smaller than the Planck scale, known as the ‘hierarchy’ problem (see the further reading list for some good explanations).

Current mass limits on many supersymmetric particles are getting pretty high, which has some physicists worried about the feasibility of finding evidence for SUSY. Many of these particles have already been excluded for masses below the order of a TeV, making it very difficult to create them with the LHC as is. While there is talk of another LHC upgrade to achieve energies even higher than 14 TeV, for now the SUSY searches will have to make use of the energy that is available.

Figure 2 shows the cross sections for pair production of various supersymmetric particles, including squarks (the superpartners of quarks) and gluinos (the superpartners of gluons). Given the luminosity scaling described previously, these cross sections tell us that with only 1 fb⁻¹, physicists will be able to surpass the existing sensitivity for these supersymmetric processes. As a result, there will be a rush of searches performed in a very short time after the run begins.

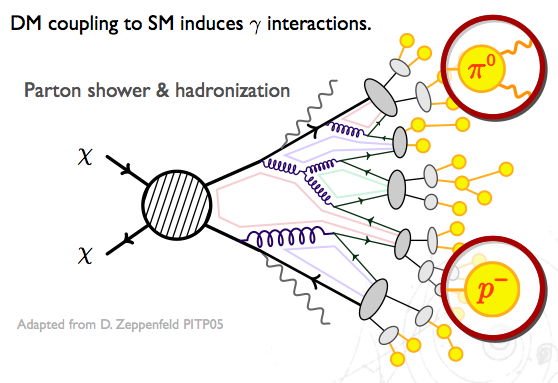

3. Dark Matter

Dark matter is one of the greatest mysteries in particle physics to date (see past particlebites posts for more information). It is also one of the most difficult mysteries to solve, since dark matter candidate particles are by definition very weakly interacting. At the LHC, the production of dark matter would show up as missing transverse energy (MET) in the detector, since the particles would not leave tracks or deposit energy.

One of the best ways to ‘see’ dark matter at the LHC is in mono-jet or mono-photon signatures; these are jets or photons that do not occur in pairs, but rather occur singly as a result of radiation. Typically these signatures have very high transverse momentum (pT) jets, giving a good primary vertex, and large amounts of MET, making them easier to observe. Figure 3 shows a Feynman diagram of such a process, with the MET recoiling off a jet or a photon.
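For the curious, MET is computed as the negative vector sum of the transverse momenta of everything the detector does see. Here is a minimal Python sketch of that idea for a toy mono-jet event. The function name `missing_transverse_energy`, the (pT, phi) input format and the 500 GeV jet are assumptions made for illustration; real reconstruction in ATLAS and CMS is far more involved.

```python
import math

# Minimal sketch: MET as the negative vector sum of visible transverse momenta.
# The event below is a toy example, not real detector data.

def missing_transverse_energy(visible):
    """Return (MET magnitude, MET azimuthal angle) from visible (pT, phi) pairs.

    pT is in GeV and phi in radians, both measured in the transverse plane.
    """
    px = -sum(pt * math.cos(phi) for pt, phi in visible)
    py = -sum(pt * math.sin(phi) for pt, phi in visible)
    return math.hypot(px, py), math.atan2(py, px)

# Toy mono-jet event: a single high-pT jet and nothing else visible.
mono_jet_event = [(500.0, 0.3)]

met, met_phi = missing_transverse_energy(mono_jet_event)
print(f"MET = {met:.1f} GeV at phi = {met_phi:.3f} rad")
```

In this toy event the MET has the same magnitude as the jet pT and points in the opposite direction, which is exactly the "MET recoiling off a jet" picture. A balanced pair of back-to-back jets would instead give a MET close to zero.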

Though the topics in this post will certainly be popular in the next few years at the LHC, they do not even begin to span the huge volume of physics analyses that we can expect to see emerging from Run II data. The next year alone has the potential to be a groundbreaking one, so stay tuned!

References:

- “Parton Luminosity and Cross Section Plots”, James Stirling, Imperial College London

- arXiv:1407.5066

- “Hunting for the Invisible”, Kerstin Hoepfner, RWTH Aachen, III. Phys. Inst. A

Further Reading:

- Theorist Matt Strassler on supersymmetry and the hierarchy problem

- Lessons for SUSY from the LHC after the first run: arXiv:1404.7191

- Nature on the subject of dark matter