Every year since 1966, particle physicists have gathered in the Alps to unveil and discuss their most important results of the year (and to ski). This year I had the privilege of attending the Moriond QCD session, so I thought I would post a recap here. The packed agenda spanned 6 days of talks and featured many great results across different areas of particle physics, so I’ll have to stick to the highlights.

FASER Observes First Collider Neutrinos

Perhaps the most exciting result of Moriond came from the FASER experiment, a small detector recently installed in the LHC tunnel downstream from the ATLAS collision point. They announced the first ever observation of neutrinos produced in a collider. Neutrinos are produced all the time in LHC collisions, but because they very rarely interact, and the existing experiments were not designed to look for them, no one had actually observed them in a detector until now. Based on data collected from last year’s collisions, FASER observed 153 candidate neutrino events with a negligible predicted background: an unmistakable observation.

This first observation opens the door to studying the copious high energy neutrinos produced in colliders, which sit in an energy range currently unprobed by other neutrino experiments. The FASER experiment is still very new, so expect more exciting results from them as they continue to analyze their data. They also released a first search for dark photons, which should continue to improve with more luminosity. On the neutrino side, they have yet to release full results based on data from their emulsion detector, which will allow them to study electron and tau neutrinos in addition to the muon neutrinos this first result is based on.

New ATLAS and CMS Results

The biggest result from the general purpose LHC experiments was ATLAS and CMS both announcing that they have observed the simultaneous production of 4 top quarks. This is one of the rarest Standard Model processes ever observed, occurring a thousand times less frequently than the production of a Higgs boson. Now that it has been observed, the two experiments will use Run-3 data to study the process in more detail and look for signs of new physics.

ATLAS also unveiled an updated measurement of the mass of the W boson. Since CDF announced its measurement last year and found a value in tension with the Standard Model at ~7-sigma, further W mass measurements have become very important. This ATLAS result was actually a reanalysis of their previous measurement, with improved parton distribution functions (PDFs) and statistical methods. Though still not as precise as the CDF measurement, these improvements shrank their uncertainty slightly (from 19 to 16 MeV). The ATLAS measurement reports a value of the W mass in very good agreement with the Standard Model, and in roughly 4-sigma tension with the CDF value. These measurements are very complex, and more work will be needed to clarify the situation.
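For anyone wondering how a number like "approximately 4-sigma" comes about, here is a minimal sketch in Python. It assumes the two measurements are independent and have Gaussian uncertainties, which is a simplification (real W mass measurements share some modeling uncertainties, so a proper comparison is more involved). The central values and the CDF uncertainty are not given in this post; they are filled in from the publicly quoted results and should be treated as assumptions. `tension_sigma` is a hypothetical helper name.

```python
import math


def tension_sigma(value_a, err_a, value_b, err_b):
    """Naive tension between two measurements, in standard deviations.

    Assumes independent Gaussian uncertainties, so the uncertainty on the
    difference is the two errors added in quadrature.
    """
    return abs(value_a - value_b) / math.hypot(err_a, err_b)


# Assumed inputs in MeV (not taken from this post; only the 16 MeV ATLAS
# uncertainty is quoted in the text above).
CDF_MW, CDF_ERR = 80433.5, 9.4
ATLAS_MW, ATLAS_ERR = 80360.0, 16.0

tension = tension_sigma(CDF_MW, CDF_ERR, ATLAS_MW, ATLAS_ERR)
print(f"ATLAS vs CDF tension: {tension:.1f} sigma")
```

With these inputs the naive estimate comes out just under 4 sigma, consistent with the figure quoted above.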

CMS had an intriguing excess (2.8-sigma global) in a search for a Higgs-like particle decaying into an electron and a muon. This kind of ‘flavor violating’ decay would be a clear indication of physics beyond the Standard Model. Unfortunately, ATLAS does not seem to see any similar excess in their data.

Status of Flavor Anomalies

At the end of 2022, LHCb announced that the golden channel of the flavor anomalies, the R(K) anomaly, had gone away upon further analysis. Many of the flavor physics talks at Moriond seemed to be dealing with the aftermath.

Of the remaining flavor anomalies, R(D), a ratio comparing the rate of B meson decays to final states with D mesons and taus against decays to D mesons plus muons or electrons, is still attracting interest. LHCb unveiled a new measurement that focused on hadronically decaying taus and found a value that agreed with the Standard Model prediction. However, this new measurement had larger error bars than others, so it only brought down the world average slightly. The deviation currently sits at around 3-sigma.
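To see why a measurement with large error bars barely moves a world average, here is a minimal sketch of an inverse-variance weighted average in Python. It assumes uncorrelated Gaussian uncertainties, which the real R(D) combination does not (it accounts for correlations between measurements). The numbers are made-up toy values in arbitrary units, not actual R(D) results, and `weighted_average` is a hypothetical helper name.

```python
import math


def weighted_average(measurements):
    """Inverse-variance weighted mean of (value, uncertainty) pairs.

    Assumes the measurements are uncorrelated with Gaussian uncertainties.
    Returns the combined value and its uncertainty.
    """
    weights = [1.0 / sigma**2 for _, sigma in measurements]
    total_weight = sum(weights)
    mean = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return mean, math.sqrt(1.0 / total_weight)


# Toy inputs (hypothetical, arbitrary units): an existing precise average
# and a new, less precise measurement that sits well below it.
existing_average = (1.00, 0.10)
new_measurement = (0.70, 0.30)

combined_value, combined_error = weighted_average([existing_average, new_measurement])
print(f"combined: {combined_value:.2f} +/- {combined_error:.3f}")
```

In this toy case the new measurement sits 0.30 below the existing average but has three times the uncertainty, so it carries only one ninth of the weight and pulls the combined value down by just 0.03.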

An interesting theory talk pointed out that essentially any new physics which would produce a deviation in R(D) should also produce a deviation in another lepton flavor ratio, R(Λc), because it features the same b → cℓν transition. However, LHCb’s recent measurement of R(Λc) actually found a small deviation in the opposite direction to that of R(D). The two results are only incompatible at the ~1.5-sigma level for now, but it’s something to keep an eye on if you are following the flavor anomaly saga.

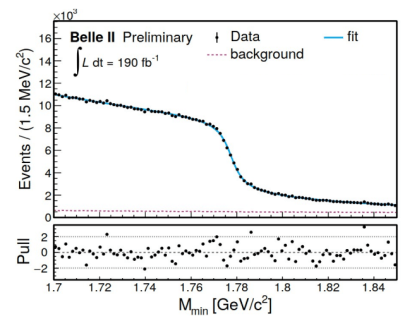

It was nice to see that the newish Belle II experiment is now producing some very nice physics results. The highlight was a world-best measurement of the mass of the tau lepton. Look out for more Belle II results as they ramp up their luminosity; hopefully they can weigh in on the R(D) anomaly soon.

Theory Pushes for Precision

Many of the theory talks focused on improving the precision of Standard Model predictions. This ‘bread and butter’ physics is sometimes overlooked in the scientific press, but it is an absolutely crucial part of the particle physics ecosystem. As experiments reach better and better precision, improved theory calculations are required to accurately model backgrounds, predict signals, and provide precise Standard Model predictions to compare against so that deviations can be spotted. Nice results in this area included evidence for an intrinsic charm quark component inside the proton from the NNPDF collaboration, very precise extractions of CKM matrix elements using lattice QCD, and two different proposals for dealing with tricky aspects of defining the ‘flavor’ of QCD jets.

Final Thoughts

Those were all the results that stuck out to me. But this is of course a very biased sampling! I am not qualified to point out the highlights of the heavy ion sessions or of many of the theory presentations. For a more comprehensive overview, I recommend checking out the slides for the excellent experimental and theoretical summary talks. Additionally, the Moriond Electroweak conference took place the week before the QCD one; it covers many of the same topics but also includes neutrino physics results and dark matter direct detection. Overall it was a very enjoyable conference and really showcased the vibrancy of the field!