Author: Alberto Garfagnini

Published: arXiv:1408.2455 [hep-ex]

Neutrinoless double beta decay is a theorized process that, if observed, would provide evidence that the neutrino is its own antiparticle. The relatively recent discovery of neutrino mass in oscillation experiments makes this search particularly relevant, since the Majorana mechanism, which requires particles to be self-conjugate, can also generate mass. A variety of experiments based on different techniques hope to observe this process. Before providing an experimental overview, we first discuss the theory itself.

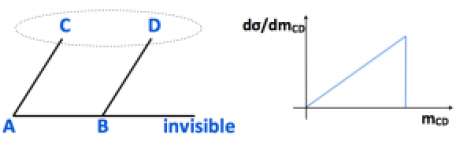

Beta decay occurs when a nucleus emits an electron or positron along with a corresponding antineutrino or neutrino. Double beta decay is simply the simultaneous beta decay of two neutrons in a nucleus. “Neutrinoless,” of course, means that this decay occurs without the accompanying neutrinos; in this case, the two neutrinos in the beta decay annihilate with one another, which is only possible if they are self-conjugate. Figures 1 and 2 demonstrate the process by formula and image, respectively.

The lack of accompanying neutrinos in such a decay violates lepton number conservation, meaning this process is forbidden unless neutrinos are Majorana fermions. Without delving into a full explanation, this simply means that the particle is its own antiparticle (more information is given in the references). The importance lies in the lepton number of the neutrino. For neutrinoless double beta decay to occur, the antineutrino emitted by one decaying neutron must be absorbed as a neutrino by the other, leaving two protons and two electrons in the final state (which conserves charge). This exchange is only possible if the neutrino and the antineutrino carry no distinguishing lepton number; in other words, if they are the same particle.
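The lepton number bookkeeping can be written out explicitly. The notation below is a standard sketch rather than a formula taken from the paper: $(A, Z)$ denotes a nucleus with mass number $A$ and charge $Z$, electrons carry lepton number $L = +1$, and antineutrinos carry $L = -1$.

$$
\begin{aligned}
2\nu\beta\beta:&\quad (A, Z) \to (A, Z+2) + 2e^- + 2\bar{\nu}_e, &\quad \Delta L &= (+2) + (-2) = 0 \\
0\nu\beta\beta:&\quad (A, Z) \to (A, Z+2) + 2e^-, &\quad \Delta L &= +2
\end{aligned}
$$

The ordinary two-neutrino mode conserves lepton number, while the neutrinoless mode changes it by two units, which is why it can only proceed if the neutrino is a Majorana particle.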

The experiments currently searching for neutrinoless double beta decay can be classified according to the material used for detection. A partial list of active and future experiments is provided below.

1. EXO (Enriched Xenon Observatory): New Mexico, USA. The detector is filled with liquid ¹³⁶Xe, which provides worse energy resolution than gaseous xenon; this is compensated by the use of both scintillation and ionization signals. The collaboration finds no statistically significant evidence for 0νββ decay and places a lower limit on the half-life of 1.1 × 10²⁵ years at 90% confidence.

2. KamLAND-Zen: Kamioka underground neutrino observatory near Toyama, Japan. Like EXO, the experiment uses liquid xenon, but in the past it has required purification due to aluminum contamination in the detector. The collaboration reports a lower limit on the 0νββ half-life of 2.6 × 10²⁵ years at 90% CL. Figure 3 shows the energy spectra of candidate events with the best-fit background.

3. GERDA (Germanium Detector Array): Laboratori Nazionali del Gran Sasso, Italy. GERDA utilizes high-purity ⁷⁶Ge diodes, which provide excellent energy resolution but typically have very large backgrounds. To prevent signal contamination, GERDA has ultra-pure shielding that protects measurements from environmental radiation background sources. The half-life is bounded below at 90% confidence by 2.1 × 10²⁵ years.

4. MAJORANA: South Dakota, USA. This experiment is under construction, but a prototype is expected to begin running in 2014. If results from GERDA and MAJORANA look good, there is talk of building a next-generation germanium experiment that combines diodes from each detector.

5. CUORE: Laboratori Nazionali del Gran Sasso, Italy. CUORE is a ¹³⁰Te bolometric direct detector, meaning that it has two layers: an absorber made of crystal that releases energy when struck, and a sensor which detects the induced temperature changes. The experiment is currently under construction, so there are no definite results, but it expects to begin taking data in 2015.
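To get a feel for what half-life limits of this size mean, the sketch below converts each quoted limit into the maximum number of decays expected per kilogram of isotope per year. It is an illustration only, not the collaborations' analysis: it assumes pure isotope, perfect detection efficiency, and a molar mass equal to the mass number, and the function and dictionary names are hypothetical.

```python
import math

AVOGADRO = 6.02214076e23  # atoms per mole

# Half-life lower limits quoted in the list above (years, 90% confidence),
# paired with an approximate molar mass in g/mol.
# Assumption: the molar mass is taken to be the isotope's mass number.
LIMITS = {
    "EXO (Xe-136)": (1.1e25, 136.0),
    "KamLAND-Zen (Xe-136)": (2.6e25, 136.0),
    "GERDA (Ge-76)": (2.1e25, 76.0),
}


def max_decays_per_kg_year(half_life_years, molar_mass_g):
    """Upper bound on decays per kg of pure isotope per year.

    For an exposure time t much shorter than the half-life T,
    the number of decays is N = ln(2) * N_atoms * t / T.
    """
    atoms_per_kg = AVOGADRO * 1000.0 / molar_mass_g
    return math.log(2) * atoms_per_kg / half_life_years


for name, (t_half, molar_mass) in LIMITS.items():
    rate = max_decays_per_kg_year(t_half, molar_mass)
    print(f"{name}: fewer than {rate:.2f} decays per kg per year")
```

Under these assumptions each limit corresponds to well under one decay per kilogram per year, which illustrates why low backgrounds and large isotope masses matter so much for these searches.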

These results show no evidence of 0νββ decay so far. An observation would demonstrate the existence of Majorana fermions and give an estimate of the absolute neutrino mass scale. However, a continued absence of a signal would be just as significant scientifically, since it would suggest that the neutrino is not in fact its own antiparticle. To get a better limit on the half-life, more advanced detector technologies are necessary; it will be interesting to see whether MAJORANA and CUORE achieve better sensitivity to this process.
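The link between the half-life and the neutrino mass scale is usually expressed as follows. This is the standard relation assuming the decay is mediated by the exchange of light Majorana neutrinos; the symbols are not defined in the text above and are introduced here for illustration: $G^{0\nu}$ is a phase-space factor, $M^{0\nu}$ is the nuclear matrix element, $m_e$ is the electron mass, and $U_{ei}$ and $m_i$ are the neutrino mixing matrix elements and mass eigenvalues.

$$
\left(T_{1/2}^{0\nu}\right)^{-1} = G^{0\nu}\,\left|M^{0\nu}\right|^{2}\,\frac{\langle m_{\beta\beta}\rangle^{2}}{m_e^{2}},
\qquad
\langle m_{\beta\beta}\rangle = \left|\sum_i U_{ei}^{2}\, m_i\right|
$$

Because the decay rate scales with $\langle m_{\beta\beta}\rangle^{2}$, a lower limit on the half-life translates into an upper limit on the effective Majorana mass, with an uncertainty that depends on the calculated nuclear matrix element.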

Further Reading:

- “Evidence for Neutrino Mass: A Decade of Discovery”: arXiv:0412032

- “Recent Results in Neutrinoless Double Beta Decay”: arXiv:1305.3306

- “Dirac, Majorana, and Weyl Fermions”: arXiv:1006.1718